I support you from the audit through to the improvement or creation of your data architecture.

About

Data developer in all its forms: from visualization to process creation, including AI.

- Freelancer since 2018 for large companies, consulting firms, startups, and SMEs.

- Data architecture development: creation of ETL pipelines, orchestration, cloud deployments, data valorization (visualization, machine learning, automation).

- Committed and performance-oriented: High standards for quality and results for my clients.

- Based in Montpellier, France with regular trips to Paris. Used to working remotely.

- Tech watch & sharing: Passionate about new technologies, I share my discoveries on my blog.

Skills

Data extraction and transformation

- Development of ETL (Extract, Transform, Load) data processing pipelines in Python.

- Implementation of tools such as DBT (SQL query orchestration) and Airflow (Python ETL).

- Data extraction from third-party services via APIs (such as Google, Slack or Teams) and from storage services (databases, FTP servers, file storage).

- Implementation of scraping scripts to extract data from websites.

Database configuration

- Definition with the client of the data schema with the attributes and indicators relevant to end use

- Production deployment on cloud services and security setup

- Development of connectors to insert data

- Writing of SQL queries

Types of databases I'm used to working with:

Data warehouse (BigQuery), data lake (S3 bucket, Google Cloud Storage or FTP), PostgreSQL (relational, SQL), MongoDB (document-oriented), InfluxDB (time series) and Neo4j (graph-oriented)

Making the most of existing data

- Dashboard creation (Data Studio, Tableau, PowerBI)

- Development of predictive algorithms (Machine Learning) in Python: clustering, neural networks and regressions, for example.

- Creation of automations using NoCode tools such as Zapier, Make or N8N for well-defined use cases.

Business Intelligence tools I'm used to working with:

Google Data Studio, Dash, Grafana, Tableau Software and PowerBI

Web backend / API development

- Development of API servers, for example to expose data from a database.

- Implementation of API authentication using OAuth 2.0, JWT tokens, API keys or third-party services such as Firebase.

- Migration to cloud with reverse proxies such as NGINX, Apache or Traefik to manage multiple micro services and HTTPS, among others.

Web technologies I'm used to working with:

Flask in Python and Node.JS in JavaScript.

Cloud Architecture

- Deploying services to cloud platforms with Docker

- Network configuration of services

- Security: e.g. configuration of firewalls, IP whitelist, backup, mirroring, HTTPS, etc.

- Versioning through Git

- Setting up tests

Cloud services I'm used to working with:

Microsoft Azure, Google Cloud Platform (GCP), OVH, Amazon Web Services (AWS) and Oracle

Non-technical skills

- Definition of data-driven business models and data usage strategies.

- Training in data manipulation tools and programming languages.

- Popularising technical subjects

Client References

Marketing data analysis with the Bulldozer Collective. Data marketing audit mission. Use of Metabase and SQL. Audit of Ads tracking and Salesforce CRM.

Mission carried out with the freelance marketing collective Bulldozer.

There were four of us: an online ads expert, an SEO specialist, an outbound acquisition strategist, and myself for data.

Our goal was to audit the B2B marketing department to optimize conversion rates and customer acquisition costs.

For this, I had access to their Metabase instance connected to their data warehouse, which allowed me to perform an in-depth SQL analysis of conversion rates by channel and at each stage of the sales funnel, and to measure acquisition costs.

We were able to present a roadmap to the executive committee.

Audit and improvement of data processing performance. Integration into the data team (6 people). Use of SQL with DBT and extractions with Airflow and Airbyte. Datalake and Datawarehouse on AWS with Athena and S3.

Freelance Data Engineer.

- Audit of DBT and Airflow usage and improvement recommendations.

- Migration of SQL queries for advertising campaign data to DBT (connected to AWS Athena) with integration into the existing data model.

- Refactoring of the data model (Medallion Architecture with Kimball) in SQL with DBT. Refactoring of some Airflow DAGs.

- Creation of a datamart layer on Aurora Postgres to expose data to the backend with better latency.

- Improvement of the DBT CI/CD pipeline on GitHub.

Technical support for the design and improvement of advertising campaign management systems. Backend development mission (Python Flask Serverless on AWS and PostgreSQL database) and data transformation (DBT, SQL and Airbyte).

Freelance Senior Backend Developer / Data Engineer.

- Technical support for the design and improvement of advertising campaign management systems.

- Serverless environment on AWS in Python / SQL via Flask and PostgreSQL. Particular attention to code quality with unit and e2e tests and a robust CI/CD chain.

- Creation of data pipelines in Python and via DBT. Setup of CI/CD with Terraform and GitHub Actions to update certain extractions using Airbyte.

- Maintenance and improvement of the backend for processing the company's financial data.

- Implementation of tools to manage margins and financial indicators.

Client based in London, so the mission was conducted in English. ETL development in Python on Airflow. Migration of hundreds of SQL queries (used for data transformation) to DBT. BigQuery data warehouse management and liaison with the business teams. Creation of dashboards on Looker Studio (formerly Google Data Studio).

Freelance Senior Data Engineer, fully remote with trips to London and Paris.

- Maintenance and development of Airflow DAGs in Python to extract data from CRM, backends, databases and several other systems.

- Migration of hundreds of SQL queries to DBT and configuration of the environment.

- Creation of a synchronisation script between the datawarehouse and the Oracle Netsuite ERP (accounting software).

- Development of SQL queries to expose data in Looker Studio (formerly Google Data Studio)

- Managing the datawarehouse on BigQuery and the Google Cloud Storage datalake

- Creation of dashboards and automated exports (with Apps Script on Google Sheets or Make) for the business.

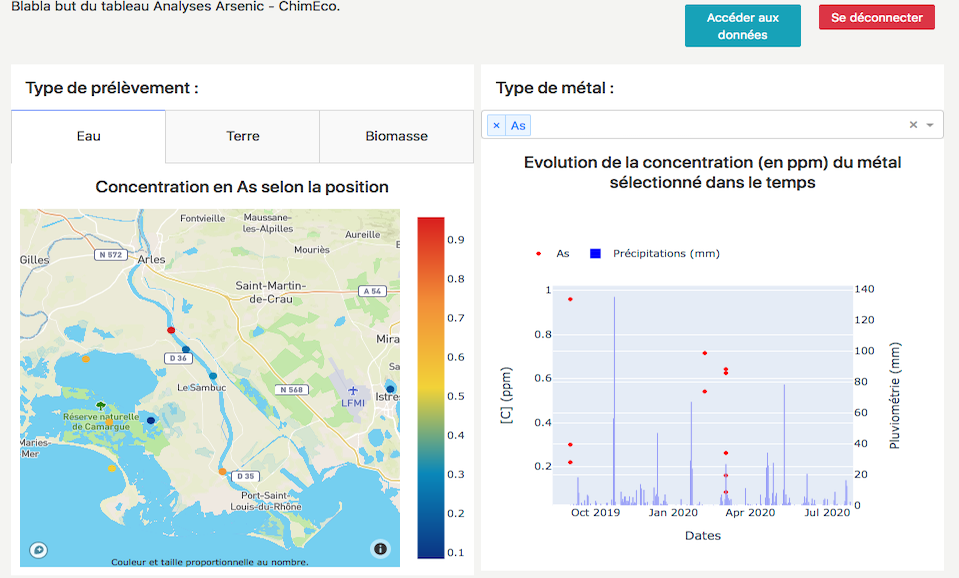

Creation of a dashboard to interpret the analysis data collected.

Project for CNRS innovation

- Gathering of requirements, and of the indicators to highlight, from the lab teams involved

- Data formatting and connection to external data sources (via API) for enrichment.

- Creating the platform in Python with the Dash library.

- On-site deployment within the lab to guarantee access for those involved

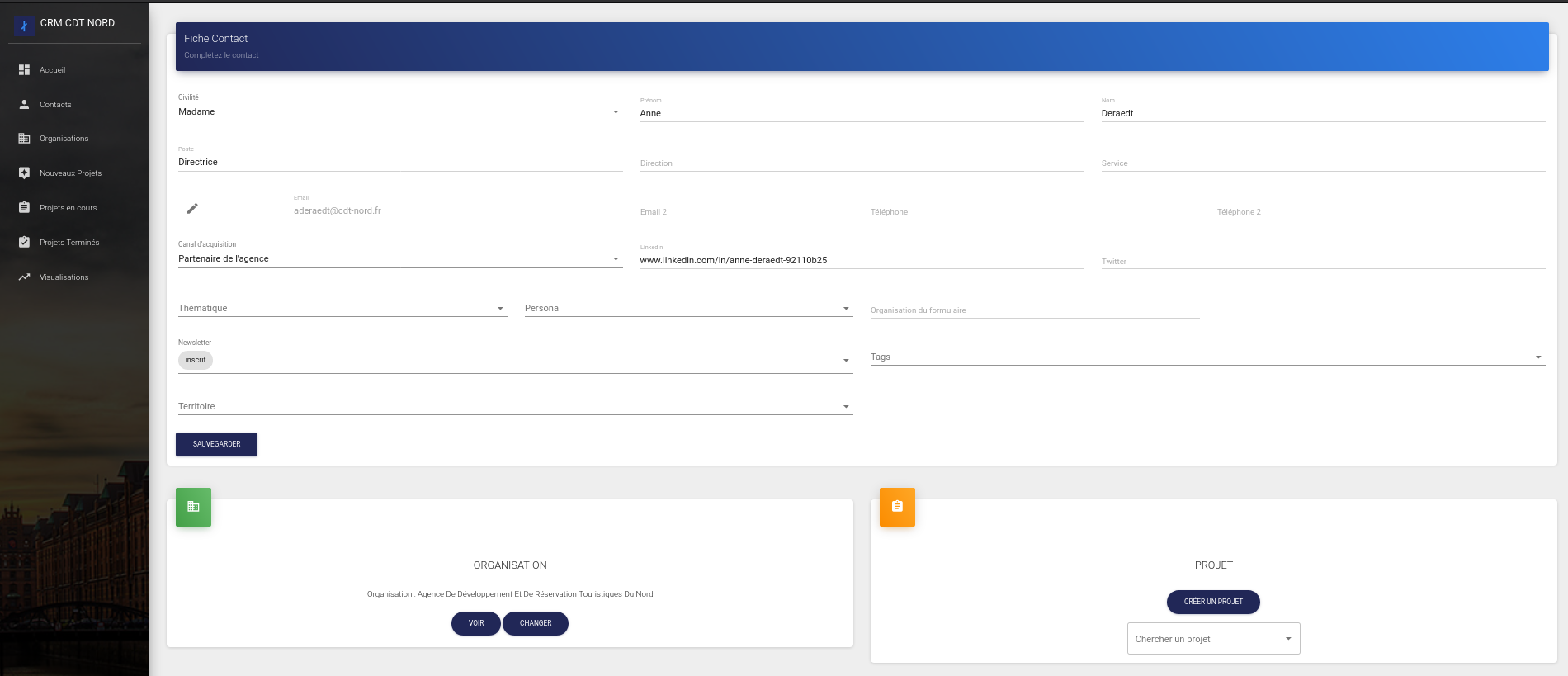

Supporting the departmental tourism committee in putting an Open Data platform online. Creation of data extractions and transformations from the agency's partners. Development of a bespoke CRM for project management.

- Collection of data requirements within the agency.

- Creation of extraction, transformation and load pipelines from tourism data sources in Python.

- Development of a bespoke project management tool (CRM) in React.JS with an API in the backend to interface with a MongoDB database.

- Configuration of the Open Data platform using the OpenDataSoft tool and connection to the various data sources.

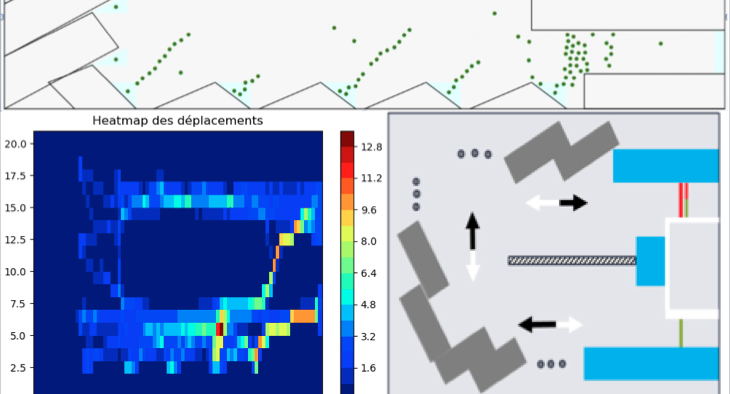

Development of software simulating pedestrian flows in waste collection centres in order to optimise skip positioning and construction.

Duration: 6 months part-time

- Simulation software in Java

- Statistical data analysis in Python

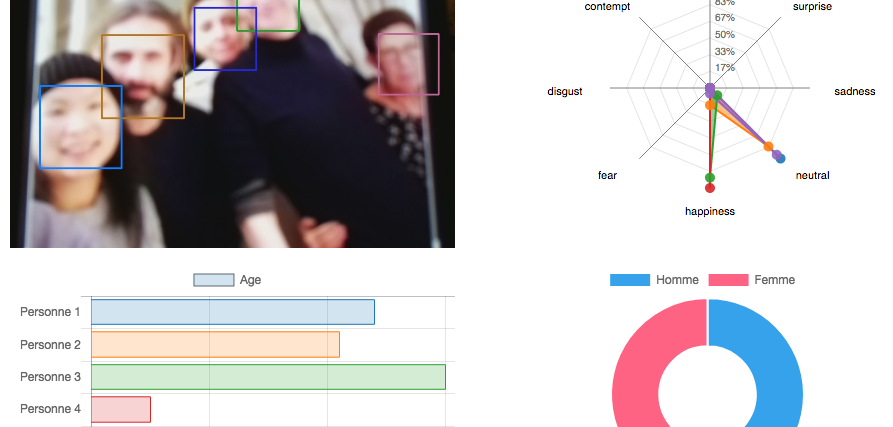

Development of data transformation and load (ETL) pipelines from Slack and Google APIs to PostgreSQL databases on Azure. Development of attention prediction models based on metadata from messages, emails, calendars and video conferences.

Creation of the start-up's first technical architecture and tech strategy.

- Collection of requirements to draw up a data schema then creation of databases

- Extraction of Slack and Google data via APIs in order to recover all the metadata of messages, emails, calendars and video conferences. Creation of data transformation pipelines in Python. Configuration of OAuth2.

- Putting the architecture into production on the Azure Cloud and comparative study of cloud offerings. Configuration of visualisation (Redash), automation (N8N) and data management (NocoDB) tools on servers. Configurations on Docker.

- Securing the architecture: setting up automatic mirroring and backups on the databases, database encryption, IP whitelisting, firewall configuration.

- Development of algorithms to measure user attention based on metadata.

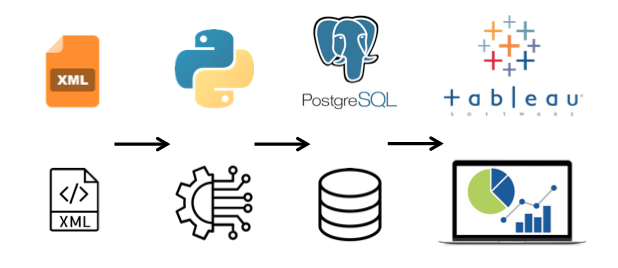

Implementation of a routine for collecting and transforming large volumes of XML files and adding hundreds of millions of rows in a PostgreSQL database for subsequent use with Tableau software.

- Development of collection and transformation scripts in Python and Bash (gigabytes of XML files to be formatted)

- Configuration of the PostgreSQL database in development and production environments (hundreds of millions of rows to be stored)

- Daily follow-up with the client and documentation writing.

- Deployment on OVH servers.

- Data visualisation with Tableau software

Data science consulting for Onepoint group. Development of Machine Learning prototypes and definition of Artificial Intelligence use cases for their clients.

- Creation of benchmark customer documents, monitoring and research into use cases

- Development of prototypes in Python, often with a Web component, to arrive at a finished product.

- Natural language processing, computer vision and recommendation algorithms.

- Development of servers on the Microsoft Azure cloud and Raspberry Pi

Consulting in data science and AI as a freelancer for the Big Four consulting firm Deloitte. Development of a collection and analysis architecture able to handle large volumes. The purpose was to analyse the automotive market for one of their clients.

- Implementation of a data collection architecture on Amazon Web Services

- Creation of scraping scripts in Python with workarounds and proxies to change IP addresses automatically.

- Analysis of the large volumes of data collected

- Drafting of a report on our client's competition based on the statistics obtained

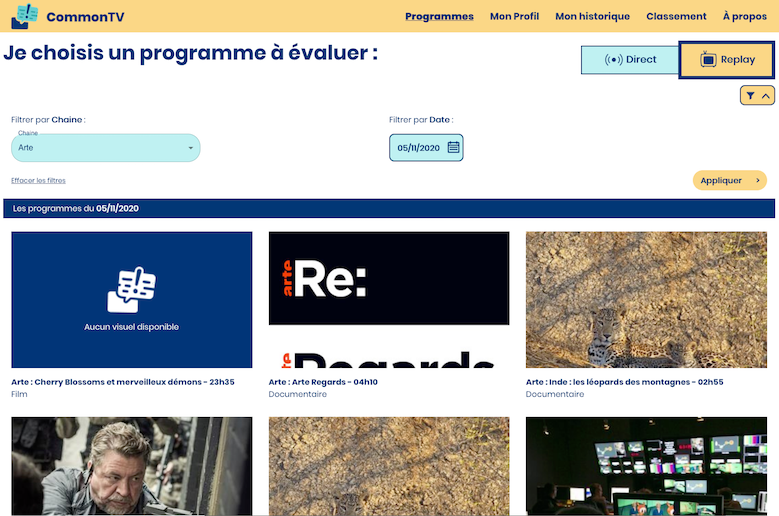

TV programme rating website for the deaf and hard of hearing. Creation of a backend server with an API for Unanimes in partnership with Microsoft and Bakhtech.

- Back-end written in Node.JS with a REST API

- MongoDB database

- Deployment on Azure cloud via Git with a Docker architecture

Customer reviews

Head of Data at Mobsuccess Group

Assigned to a short mission, Florian quickly took charge of the business data and our DBT stack, enabling a rapid migration of SQL queries not yet integrated into our data models. Very good technical advice with strong business value.

Manager at Budaviz

In addition to a very pleasant relationship, Florian is conscientious, rigorous, attentive and proactive. He quickly understood my needs, namely setting up a database to group certain public data I wanted to use with Tableau software. He created several scripts to automate daily updates of the database and handled its installation on a server. He helped me choose a hosting plan for the server. The work delivered is very clean and well documented. A real talent I totally recommend.

CTO at Onefinestay (Accor Group)

Florian has been working with us for 2 years. Initially, it was for a 6-month contract, consisting in migrating our data pipelines to work with the new systems we were implementing at the time. Florian did very well, understood the business concepts quickly, our tech environment as well, meaning that the job was achieved in time, although it was not very easy with the amount of legacy transformation queries we had at the time. Since everything was going well, and that we had growing internal needs, I decided to continue working with Florian. He continued to clean up our legacy systems, and he migrated our whole ELT processes into DBT. He prepared training slides, and trained the teams internally. The job was achieved in less than 2 months. This greatly improved our ways of working, thus the internal teams' satisfaction. Florian also worked on pushing our financial data coming from our back offices to NetSuite. This was a very complex project, with multiple NetSuite customizations needed. Florian never abandoned, even though it was really difficult to get all the required information sometimes. Florian worked on many other structural and strategic projects during these 2 years, and he never let me down. The only reason we had to stop working together is because we wanted to internalize the position, but Florian wanted to remain as a contractor, which I can fully understand. Florian is a true gem, he can work on very complex projects, and go to the bottom of them, without any doubts. He is also a very nice person, which is always a nice addition :) We will miss him, for sure.

Certification Institut Mines Télécom

Certification INRIA

Experiences and Education

IT services for all types of companies

Developing projects for processing and adding value to my clients' data

Start-up specialised in transport for people with reduced mobility.

- Implementing the strategy with my partners, who develop the commercial side and collect user requirements.

- Creation of the company from a legal point of view, management of accounting and administration.

Development of the information system and services:

- Development of the architecture for collecting, transforming and storing cartographic (GIS) data, exposed via REST and GraphQL APIs. Deployment on 2 cloud providers. (Architecture and back-end)

- Development of 3 websites with React.JS and jQuery for clients, plus in-house data management tools. (Visualisation tools)

DELOITTE Consulting

Analytics & Information Management (AIM) business unit, which develops and implements Artificial Intelligence (AI) projects.

- Development of machine learning algorithms on text (Natural Language Processing) for automatic mail classification.

- Development of an automatic invoice processing prototype combining image-to-text extraction (OCR) with Named Entity Recognition (NER) applied to the extracted text.

- Client assignment involving the collection and analysis of large volumes of data in the automotive sector.

Start-up specialised in hyper-spectral image capture from drones

Development of a deep learning strategy for a pattern recognition application using a miniaturised hyperspectral sensor on a smartphone.

- Use of the Python package TensorFlow

- Virtualising the data processing environment with Docker

- Drafting of a report on possible use cases for Neural Networks for the startup.

Grenoble, France

- Grande Ecole Diploma:

- Accounting and corporate governance

- Digital Marketing

- TOEIC: 915/990

- Final thesis: "The business impact of the use of data and the ethical issues involved".

Brest, France

- Engineering Master of Science in Data Science:

- Machine Learning

- Business Intelligence

- Big Data

- TOEFL: 620/677

Shanghai Jiao Tong University (SJTU)

Shanghai, China

- 6-month university exchange

- Data Science / Big Data

Preparatory classes

Math-Physics (PSI*)

High school Joffre, Montpellier, France